How to View Pending Uploads in Dropbox

Camera uploads is a feature in our Android and iOS apps that automatically backs up a user's photos and videos from their mobile device to Dropbox. The feature was first introduced in 2012, and uploads millions of photos and videos for hundreds of thousands of users every day. People who use camera uploads are some of our most dedicated and engaged users. They care deeply about their photo libraries, and expect their backups to be quick and dependable every time. It's important that we offer a service they can trust.

Until recently, camera uploads was built on a C++ library shared between the Android and iOS Dropbox apps. This library served us well for a long time, uploading billions of images over many years. However, it had numerous problems. The shared code had grown polluted with complex platform-specific hacks that made it hard to understand and risky to change. This risk was compounded by a lack of tooling support and a shortage of in-house C++ expertise. Plus, after more than five years in production, the C++ implementation was beginning to show its age. It was unaware of platform-specific restrictions on background processes, had bugs that could delay uploads for long periods of time, and made outage recovery difficult and time-consuming.

In 2019, we decided that rewriting the feature was the best way to offer a reliable, trustworthy user experience for years to come. This time, the Android and iOS implementations would be separate and use platform-native languages (Kotlin and Swift respectively) and libraries (such as WorkManager and Room for Android). The implementations could then be optimized for each platform and evolve independently, without being constrained by design decisions from the other.

This post is about some of the design, validation, and release decisions we made while building the new camera uploads feature for Android, which we released to all users during the summer of 2021. The project shipped successfully, with no outages or major issues; error rates went down, and upload performance greatly improved. If you haven't already enabled camera uploads, you should try it out for yourself.

Designing for background reliability

The primary value proposition of camera uploads is that it works silently in the background. For users who don't open the app for weeks or even months at a time, new photos should still upload promptly.

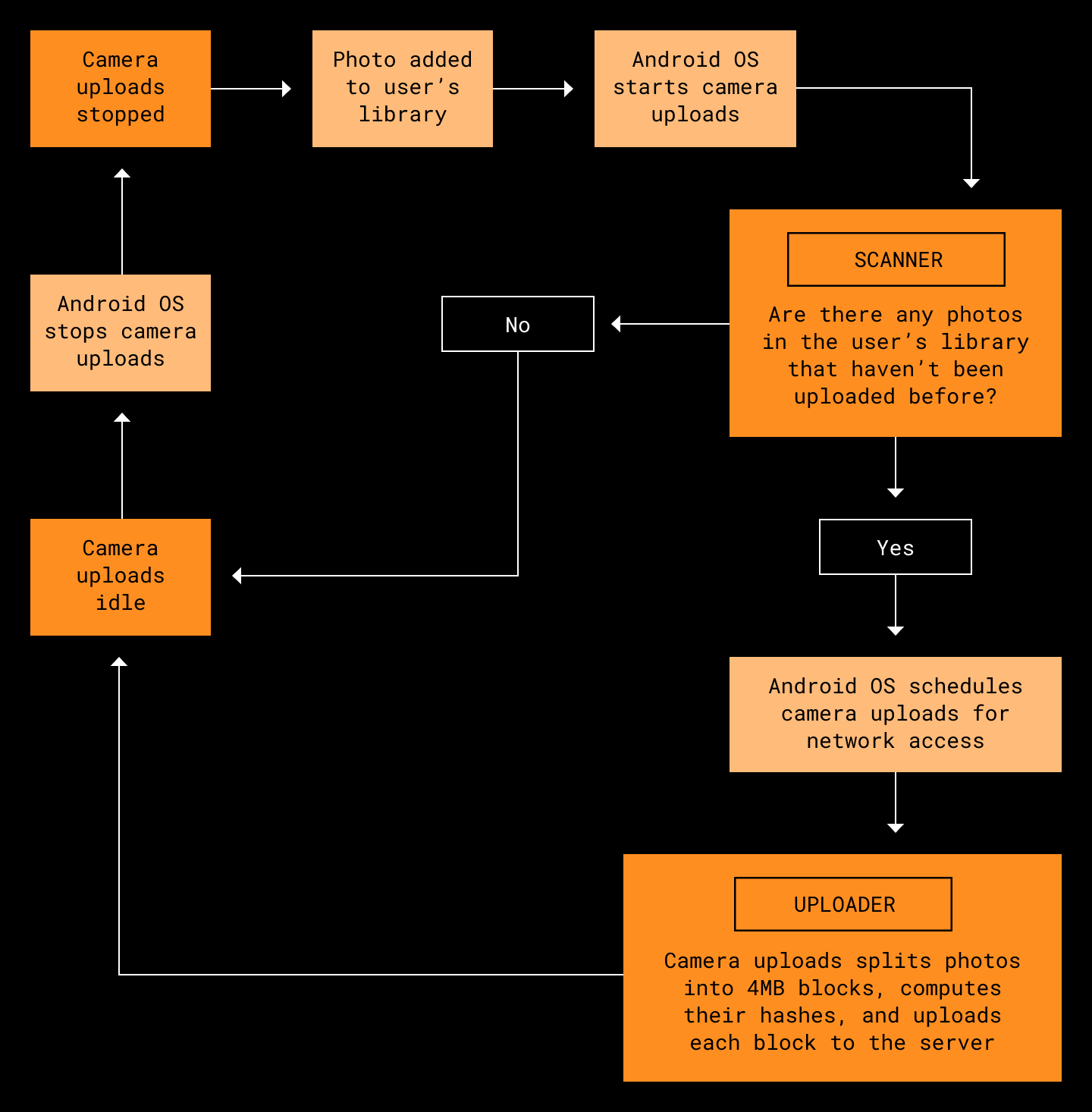

How does this work? When someone takes a new photo or modifies an existing photo, the OS notifies the Dropbox mobile app. A background worker we call the scanner carefully identifies all the photos (or videos) that haven't yet been uploaded to Dropbox and queues them for upload. Then another background worker, the uploader, batch uploads all the photos in the queue.

Uploading is a two-step process. First, like many Dropbox systems, we break the file into 4 MB blocks, compute the hash of each block, and upload each block to the server. Once all the file blocks are uploaded, we make a final commit request to the server with a list of all block hashes in the file. This creates a new file consisting of those blocks in the user's Camera Uploads folder. Photos and videos uploaded to this folder can then be accessed from any linked device.
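The first step described above (split into blocks, hash each block) can be sketched in a few lines of Kotlin. This is only an illustration under stated assumptions: the post does not name the hash function, so SHA-256 is used as a stand-in, and `hashBlocks`, `toHex`, and `defaultBlockSizeBytes` are hypothetical names, not part of the Dropbox codebase.

```kotlin
import java.security.MessageDigest

// Assumed block size, taken from the "4 MB blocks" mentioned in the text.
val defaultBlockSizeBytes: Int = 4 * 1024 * 1024

// Hypothetical helper: hex-encodes a digest so block hashes are easy to log and compare.
fun ByteArray.toHex(): String = joinToString("") { "%02x".format(it) }

// Splits `data` into fixed-size blocks and returns one hash per block, in file order.
// SHA-256 is an assumption for illustration; the post does not say which hash is used.
fun hashBlocks(data: ByteArray, blockSize: Int = defaultBlockSizeBytes): List<String> {
    require(blockSize > 0) { "blockSize must be positive" }
    val hashes = mutableListOf<String>()
    var offset = 0
    while (offset < data.size) {
        // The last block may be shorter than blockSize.
        val length = minOf(blockSize, data.size - offset)
        val digest = MessageDigest.getInstance("SHA-256")
        digest.update(data, offset, length)
        hashes += digest.digest().toHex()
        offset += length
    }
    return hashes
}
```

The returned list is the kind of payload the final commit request would carry: one hash per block, in order. A real uploader would stream the file rather than hold it in a single ByteArray.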

One of our biggest challenges is that Android places strong constraints on how often apps can run in the background and what capabilities they have. For example, App Standby limits our background network access if the Dropbox app hasn't recently been foregrounded. This means we might only be allowed to access the network for a 10-minute interval once every 24 hours. These restrictions have grown more strict in recent versions of Android, and the cross-platform C++ version of camera uploads was not well-equipped to handle them. It would sometimes try to perform uploads that were doomed to fail because of a lack of network access, or fail to restart uploads during the system-provided window when network access became available.

Our rewrite does not escape these background restrictions; they still apply unless the user chooses to disable them in Android's system settings. However, we reduce delays as much as possible by taking maximum advantage of the network access we do receive. We use WorkManager to handle these background constraints for us, guaranteeing that uploads are attempted if, and only if, network access becomes available. Unlike our C++ implementation, we also do as much work as possible while offline (for example, by performing rudimentary checks on new photos for duplicates) before asking WorkManager to schedule us for network access.

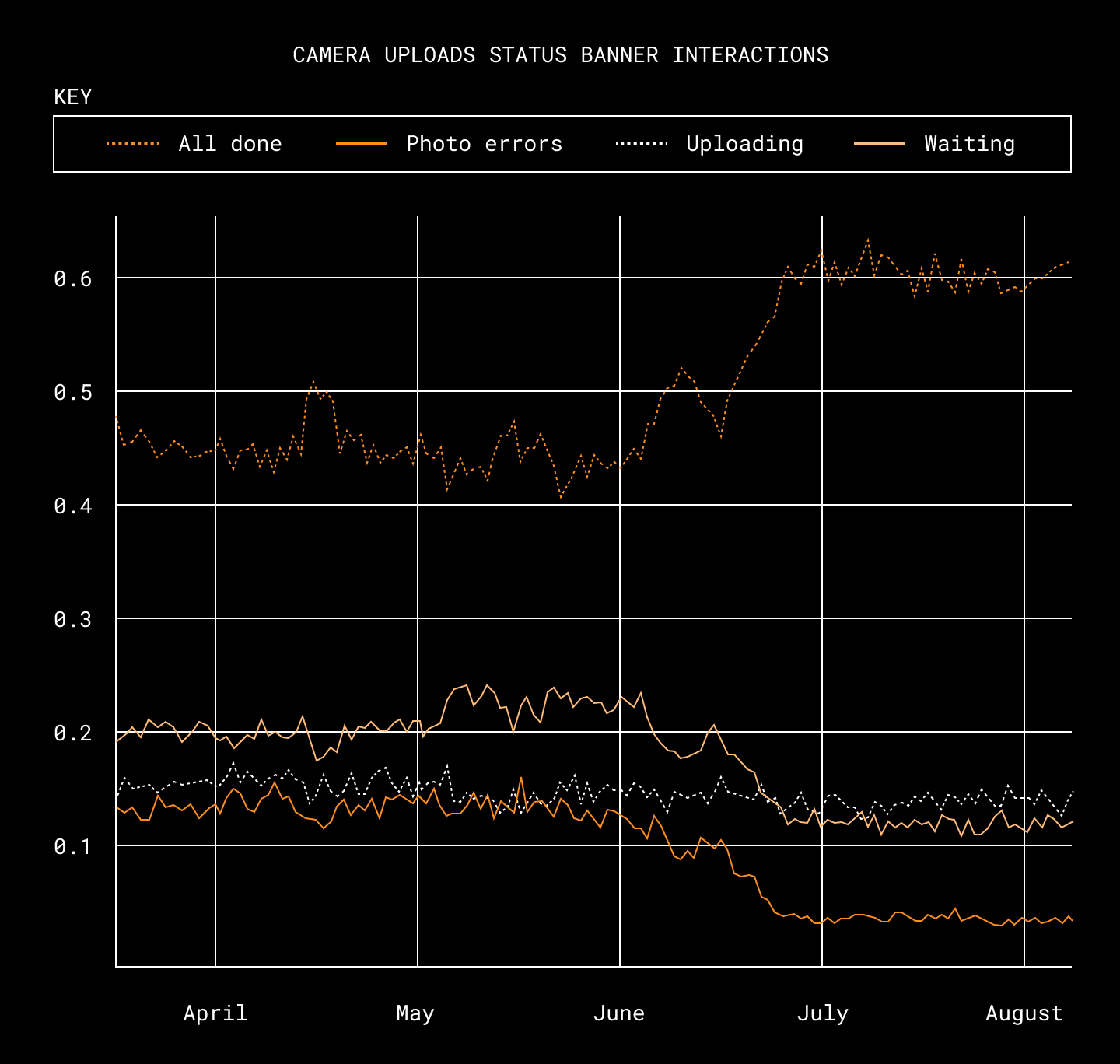

Measuring interactions with our status banners helps us identify emerging issues in our apps, and is a helpful signal in our efforts to eliminate errors. After the rewrite was released, we saw users interacting with more "all done" statuses than usual, while the number of "waiting" or error status interactions went down. (This data reflects only paid users, but non-paying users show similar results.)

To further optimize use of our limited network access, we also refined our handling of failed uploads. C++ camera uploads aggressively retried failed uploads an unlimited number of times. In the rewrite we added backoff intervals between retry attempts, and also tuned our retry behavior for different error categories. If an error is likely to be transient, we retry multiple times. If it's likely to be permanent, we don't bother retrying at all. As a result, we make fewer overall retry attempts, which limits network and battery usage, and users see fewer errors.
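A retry policy along these lines might look like the sketch below. The post only says that backoff intervals were added and that transient and permanent errors are treated differently; the exponential schedule, the attempt cap, and every name here (`ErrorCategory`, `RetryDecision`, `decideRetry`) are assumptions made for illustration.

```kotlin
// Hypothetical error categories; the post only distinguishes "transient" from "permanent".
enum class ErrorCategory { TRANSIENT, PERMANENT }

// Outcome of a retry decision: either wait `delayMillis` and retry, or give up.
sealed class RetryDecision {
    data class RetryAfter(val delayMillis: Long) : RetryDecision()
    object GiveUp : RetryDecision()
}

// Decides what to do after failed attempt number `attempt` (1-based).
// All numeric defaults are illustrative assumptions, not values from the post.
fun decideRetry(
    category: ErrorCategory,
    attempt: Int,
    maxAttempts: Int = 5,
    baseDelayMillis: Long = 1_000,
    maxDelayMillis: Long = 60_000
): RetryDecision {
    require(attempt >= 1) { "attempt is 1-based" }
    // Permanent errors are never retried; transient ones stop after maxAttempts.
    if (category == ErrorCategory.PERMANENT || attempt >= maxAttempts) {
        return RetryDecision.GiveUp
    }
    // Exponential backoff (an assumed schedule): base, 2x base, 4x base, ...
    val exponent = minOf(attempt - 1, 30) // keep the shift small
    val delay = minOf(baseDelayMillis shl exponent, maxDelayMillis)
    return RetryDecision.RetryAfter(delay)
}
```

With these defaults, `decideRetry(ErrorCategory.TRANSIENT, 3)` asks the caller to wait four seconds, while any `PERMANENT` error returns `GiveUp` immediately. Keeping the decision a pure function makes it easy to tune per error type from retry success metrics.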

Designing for performance

Our users don't just expect camera uploads to work reliably. They also expect their photos to upload quickly, and without wasting system resources. We were able to make some big improvements here. For example, first-time uploads of large photo libraries now finish up to four times faster. There are a few ways our new implementation achieves this.

Parallel uploads

First, we substantially improved performance by adding support for parallel uploads. The C++ version uploaded only one file at a time. Early in the rewrite, we collaborated with our iOS and backend infrastructure colleagues to design an updated commit endpoint with support for parallel uploads.

Once the server constraint was gone, Kotlin coroutines made it easy to run uploads concurrently. Although Kotlin Flows are typically processed sequentially, the available operators are flexible enough to serve as building blocks for powerful custom operators that support concurrent processing. These operators can be chained declaratively to produce code that's much simpler, and has less overhead, than the manual thread management that would've been necessary in C++.

    val uploadResults = mediaUploadStore
        .getPendingUploads()
        .unorderedConcurrentMap(concurrentUploadCount) {
            mediaUploader.upload(it)
        }
        .takeUntil { it != UploadTaskResult.SUCCESS }
        .toList()

A simple example of a concurrent upload pipeline. unorderedConcurrentMap is a custom operator that combines the built-in flatMapMerge and transform operators.

Optimizing memory utilize

After adding support for parallel uploads, we saw a big uptick in out-of-memory crashes from our early testers. A number of improvements were required to make parallel uploads stable enough for production.

First, we modified our uploader to dynamically vary the number of simultaneous uploads based on the amount of available system memory. This way, devices with lots of memory could enjoy the fastest possible uploads, while older devices would not be overwhelmed. However, we were still seeing much higher memory usage than we expected, so we used the memory profiler to take a closer look.
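One simple way to scale concurrency with memory is to divide the available headroom by an estimated per-upload cost and clamp the result. The sketch below assumes that approach; the post does not describe the actual heuristic, and `chooseConcurrentUploadCount`, `perUploadBytes`, and `maxConcurrency` are hypothetical names and tuning knobs.

```kotlin
// Returns how many uploads to run at once given the memory currently available.
// `perUploadBytes` and `maxConcurrency` are hypothetical tuning knobs, not values from the post.
fun chooseConcurrentUploadCount(
    availableBytes: Long,
    perUploadBytes: Long,
    maxConcurrency: Int
): Int {
    require(perUploadBytes > 0) { "perUploadBytes must be positive" }
    require(maxConcurrency >= 1) { "maxConcurrency must be at least 1" }
    val affordable = availableBytes / perUploadBytes
    // Always allow one upload so low-memory devices still make progress.
    return affordable.coerceIn(1L, maxConcurrency.toLong()).toInt()
}

// One rough way to estimate headroom on the JVM: the heap limit minus what is in use now.
fun estimateAvailableHeapBytes(): Long {
    val runtime = Runtime.getRuntime()
    val used = runtime.totalMemory() - runtime.freeMemory()
    return runtime.maxMemory() - used
}
```

A caller could recompute `chooseConcurrentUploadCount(estimateAvailableHeapBytes(), perUploadBytes, maxConcurrency)` before each batch, so the level of parallelism follows the device's current state rather than being fixed at startup.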

The first thing we noticed was that memory consumption wasn't returning to its pre-upload baseline after all uploads were done. It turned out this was due to an unfortunate behavior of the Java NIO API. It created an in-memory cache on every thread where we read a file, and once created, the cache could never be destroyed. Since we read files with the threadpool-backed IO dispatcher, we typically ended up with many of these caches, one for each dispatcher thread we used. We resolved this by switching to direct byte buffers, which don't allocate this cache.

The next thing we noticed were large spikes in memory usage when uploading, especially with larger files. During each upload, we read the file in blocks, copying each block into a ByteArray for further processing. We never created a new byte array until the previous one had gone out of scope, so we expected only one to be in memory at a time. However, it turned out that when we allocated a large number of byte arrays in a short time, the garbage collector could not free them quickly enough, causing a transient memory spike. We resolved this issue by re-using the same buffer for all block reads.
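The buffer re-use fix can be illustrated with a small block reader that allocates its ByteArray once and hands the same array to a callback for every block. This is a minimal sketch under assumptions, not the production code: `fillBuffer` and `forEachBlock` are hypothetical helpers, and a plain InputStream stands in for whatever file API the real uploader uses.

```kotlin
import java.io.InputStream

// Fills `buffer` from `input` until it is full or the stream ends; returns the bytes read.
fun fillBuffer(input: InputStream, buffer: ByteArray): Int {
    var filled = 0
    while (filled < buffer.size) {
        val n = input.read(buffer, filled, buffer.size - filled)
        if (n < 0) break // end of stream
        filled += n
    }
    return filled
}

// Reads `input` in blocks of up to `blockSize` bytes, reusing a single buffer for every read.
// `onBlock` receives the shared buffer plus the number of valid bytes in it; it must finish
// with (or copy) the data before returning, because the next read overwrites the buffer.
// Returns the number of blocks processed.
fun forEachBlock(
    input: InputStream,
    blockSize: Int,
    onBlock: (buffer: ByteArray, length: Int) -> Unit
): Int {
    require(blockSize > 0) { "blockSize must be positive" }
    val buffer = ByteArray(blockSize) // allocated once, not once per block
    var blocks = 0
    while (true) {
        val read = fillBuffer(input, buffer)
        if (read == 0) break
        onBlock(buffer, read)
        blocks++
    }
    return blocks
}
```

The trade-off is that the callback no longer owns the data: anything that must outlive the callback has to be copied out. In exchange, peak memory no longer depends on how quickly the garbage collector reclaims short-lived arrays.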

Parallel scanning and uploading

In the C++ implementation of camera uploads, uploading could not start until we finished scanning a user's photo library for changes. To avoid upload delays, each scan only looked at changes that were newer than what was seen in the previous scan.

This approach had downsides. There were some edge cases where photos with misleading timestamps could be skipped completely. If we ever missed photos due to a bug or OS change, shipping a fix wasn't enough to recover; we also had to clear affected users' saved scan timestamps to force a full re-scan. Plus, when camera uploads was first enabled, we still had to check everything before uploading anything. This wasn't a great first impression for new users.

In the rewrite, we ensured correctness by re-scanning the whole library after every change. We also parallelized uploading and scanning, so new photos can start uploading while we're still scanning older ones. This means that although re-scanning can take longer, the uploads themselves still start and finish promptly.

Validation

A rewrite of this magnitude is risky to ship. It has dangerous failure modes that might only show up at scale, such as corrupting one out of every million uploads. Plus, as with most rewrites, we could not avoid introducing new bugs because we did not understand (or even know about) every edge case handled by the old system. We were reminded of this at the start of the project when we tried to remove some ancient camera uploads code that we thought was dead, and instead ended up DDOSing Dropbox's crash reporting service. 🙃

Hash validation in production

During early development, we validated many low-level components by running them in production alongside their C++ counterparts and then comparing the outputs. This let us confirm that the new components were working correctly before we started relying on their results.

One of those components was a Kotlin implementation of the hashing algorithms that we use to identify photos. Because these hashes are used for de-duplication, unexpected things could happen if the hashes change for even a tiny percentage of photos. For example, we might re-upload old photos believing they are new. When we ran our Kotlin code alongside the C++ implementation, both implementations almost always returned matching hashes, but they differed about 0.005% of the time. Which implementation was wrong?

To answer this, we added some additional logging. In cases where Kotlin and C++ disagreed, we checked if the server subsequently rejected the upload because of a hash mismatch, and if so, what hash it was expecting. We saw that the server was expecting the Kotlin hashes, giving us high confidence the C++ hashes were wrong. This was great news, since it meant we had fixed a rare bug we didn't even know we had.
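The side-by-side comparison described in this section follows a general pattern: keep relying on the trusted implementation, run the candidate on the same input, and record any disagreement. The sketch below shows that pattern in generic form. `ShadowResult` and `runInShadow` are hypothetical names, and the real system's logging and server-side checks are not modeled.

```kotlin
// Result of running both implementations on one input.
data class ShadowResult<R>(val trusted: R, val candidate: R?, val matched: Boolean)

// Runs `trusted` (the implementation currently relied on) and `candidate` (the rewrite)
// on the same input. Only the trusted result is meant to be used by callers; mismatches
// and candidate failures are reported through `onMismatch` for later analysis.
fun <T, R> runInShadow(
    input: T,
    trusted: (T) -> R,
    candidate: (T) -> R,
    onMismatch: (input: T, trusted: R, candidate: R?) -> Unit
): ShadowResult<R> {
    val expected = trusted(input)
    // A crash in the candidate must not affect the production path.
    val actual: R? = try {
        candidate(input)
    } catch (e: Exception) {
        null
    }
    val matched = actual == expected
    if (!matched) onMismatch(input, expected, actual)
    return ShadowResult(expected, actual, matched)
}
```

As the hash example shows, a mismatch does not by itself say which side is wrong. The `onMismatch` callback is where extra context (such as what the server later accepted) would be attached to settle that question.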

Validating state transitions

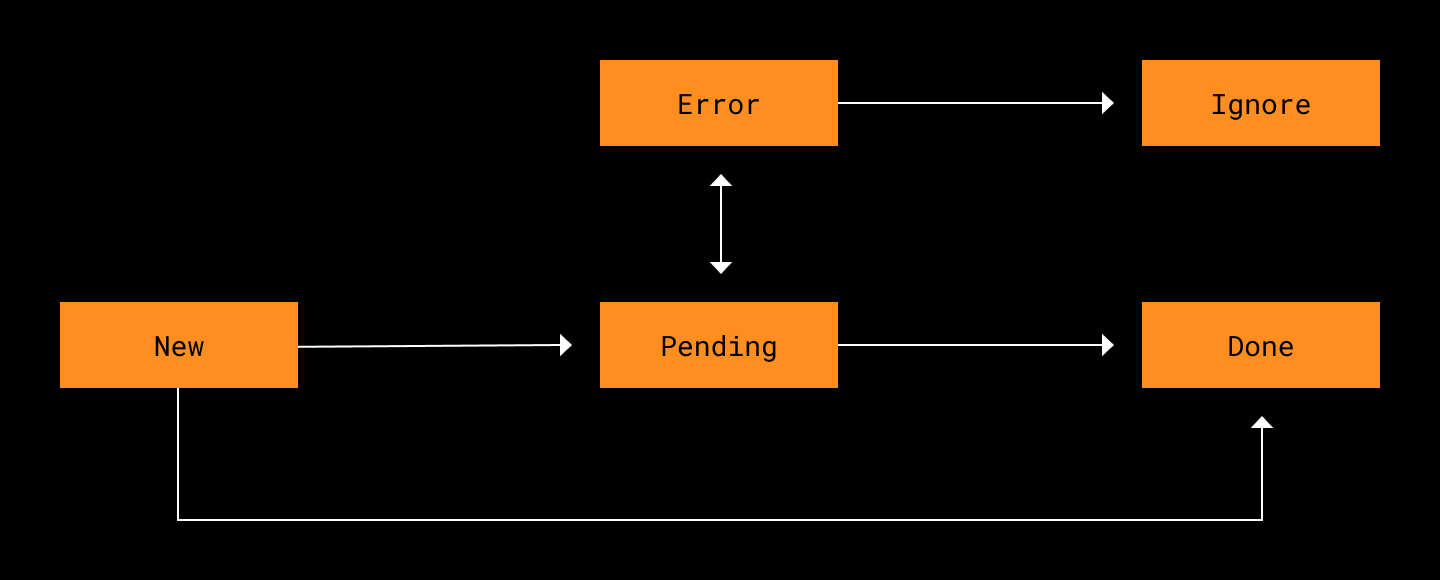

Camera uploads uses a database to track each photo's upload state. Typically, the scanner adds photos in state NEW and then moves them to PENDING (or DONE if they don't need to be uploaded). The uploader tries to upload PENDING photos and then moves them to DONE or ERROR.

Since we parallelize so much work, it's normal for multiple parts of the system to read and write this state database simultaneously. Individual reads and writes are guaranteed to happen sequentially, but we're still vulnerable to subtle bugs where multiple workers try to change the state in redundant or contradictory ways. Since unit tests only cover single components in isolation, they won't catch these bugs. Even an integration test might miss rare race conditions.

In the rewritten version of camera uploads, we guard against this by validating every state update against a set of allowed state transitions. For example, we stipulate that a photo can never move from ERROR to DONE without passing back through PENDING. Unexpected state transitions could indicate a serious bug, so if we see one, we stop uploading and report an exception.
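A transition check of this kind can be expressed as a lookup table from each state to the states it may move to. The table below is inferred from the description in this section and is an assumption, not the actual production rule set; `allowedTransitions`, `IllegalStateTransitionException`, and `transition` are hypothetical names.

```kotlin
// Upload states named in the post.
enum class UploadState { NEW, PENDING, DONE, ERROR }

// Allowed transitions, inferred from the description in the text; the real table is an assumption.
val allowedTransitions: Map<UploadState, Set<UploadState>> = mapOf(
    UploadState.NEW to setOf(UploadState.PENDING, UploadState.DONE),
    UploadState.PENDING to setOf(UploadState.DONE, UploadState.ERROR),
    UploadState.ERROR to setOf(UploadState.PENDING),
    UploadState.DONE to emptySet<UploadState>()
)

// Hypothetical exception type raised when an update is not in the table.
class IllegalStateTransitionException(from: UploadState, to: UploadState) :
    IllegalStateException("Unexpected upload state transition: $from -> $to")

// Returns the new state, or throws if the transition is not in the allowed table.
fun transition(from: UploadState, to: UploadState): UploadState {
    val allowed = allowedTransitions[from].orEmpty()
    if (to !in allowed) throw IllegalStateTransitionException(from, to)
    return to
}
```

Under this table, `transition(UploadState.ERROR, UploadState.DONE)` throws, and so does a redundant `DONE` to `DONE` update. Routing every database write through one function like this means a contradictory update from any worker surfaces as an exception instead of silently overwriting state.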

These checks helped us find a nasty bug early in our rollout. We started to see a high volume of exceptions in our logs that were caused when camera uploads tried to transition photos from DONE to DONE. This made us realize we were uploading some photos multiple times! The root cause was a surprising behavior in WorkManager where unique workers can restart before the previous instance is fully cancelled. No duplicate files were being created because the server rejects them, but the redundant uploads were wasting bandwidth and time. Once we fixed the issue, upload throughput dramatically improved.

Rolling it out

Even after all this validation, we still had to be cautious during the rollout. The fully-integrated system was more complex than its parts, and we'd also need to contend with a long tail of rare device types that are not represented in our internal user testing pool. We also needed to continue to meet or surpass the high expectations of all our users who rely on camera uploads.

To reduce this risk preemptively, we made sure to support rollbacks from the new version to the C++ version. For example, we ensured that all user preference changes made in the new version would apply to the old version as well. In the end we never needed to roll back, but it was still worth the effort to have the option available in case of disaster.

We started our rollout with an opt-in pool of beta (Play Store early access) users who receive a new version of the Dropbox Android app every week. This pool of users was large enough to surface rare errors and collect key performance metrics such as upload success rate. We monitored these key metrics in this population for a number of months to gain confidence it was ready to ship widely. We discovered many problems during this time period, but the fast beta release cadence allowed us to iterate and fix them quickly.

We also monitored many metrics that could hint at future problems. To make sure our uploader wasn't falling behind over time, we watched for signs of ever-growing backlogs of photos waiting to upload. We tracked retry success rates by error type, and used this to fine-tune our retry algorithm. Last but not least, we also paid close attention to feedback and support tickets we received from users, which helped surface bugs that our metrics had missed.

When we finally released the new version of camera uploads to all users, it was clear our months spent in beta had paid off. Our metrics held steady through the rollout and we had no major surprises, with improved reliability and low error rates right out of the gate. In fact, we ended up finishing the rollout ahead of schedule. Since we'd front-loaded so much quality improvement work into the beta period (with its weekly releases), we didn't have any multi-week delays waiting for critical bug fixes to roll out in the stable releases.

So, was it worth it?

Rewriting a big legacy feature isn't always the right decision. Rewrites are extremely time-consuming (the Android version alone took two people working for two full years) and can easily cause major regressions or outages. In order to be worthwhile, a rewrite needs to deliver tangible value by improving the user experience, saving engineering time and effort in the long term, or both.

What advice do we have for others who are beginning a project like this?

- Define your goals and how you will measure them. At the start, this is important to make sure that the benefits will justify the effort. At the end, it will help you determine whether you got the results you wanted. Some goals (for example, future resilience against OS changes) may not be quantifiable, and that's OK, but it's good to spell out which ones are and aren't.

- De-risk it. Identify the components (or system-wide interactions) that would cause the biggest problems if they failed, and guard against those failures from the very start. Build critical components first, and try to test them in production without waiting for the whole system to be finished. It's also worth doing extra work up-front in order to be able to roll back if something goes wrong.

- Don't rush. Shipping a rewrite is arguably riskier than shipping a new feature, since your audience is already relying on things to work as expected. Start by releasing to an audience that's just large enough to give you the data you need to evaluate success. Then, watch and wait (and fix stuff) until your data give you confidence to continue. Dealing with problems when the user base is small is much faster and less stressful in the long run.

- Limit your scope. When doing a rewrite, it's tempting to tackle new feature requests, UI cleanup, and other backlog work at the same time. Consider whether this will actually be faster or easier than shipping the rewrite first and fast-following with the rest. During this rewrite we addressed issues linked to the core architecture (such as crashes intrinsic to the underlying data model) and deferred all other improvements. If you change the feature too much, not only does it take longer to implement, but it's also harder to notice regressions or roll back.

In this case, we feel good about the decision to rewrite. We were able to improve reliability right away, and more importantly, we set ourselves up to stay reliable in the future. As the iOS and Android operating systems continue to evolve in separate directions, it was only a matter of time before the C++ library broke badly enough to require fundamental systemic changes. Now that the rewrite is complete, we're able to build and iterate on camera uploads much faster, and offer a better experience for our users, too.

Also: We're hiring!

Are you a mobile engineer who wants to make software that's reliable and maintainable for the long haul? If so, we'd love to have you at Dropbox! Visit our jobs page to see current openings.

Source: https://dropbox.tech/mobile/making-camera-uploads-for-android-faster-and-more-reliable
